Integrating Ollama into Live Helper Chat with Tool Calls Support

This sample uses the llama3-groq-tool-use model, but any other model that supports tool calling will work as well.

Of the models I have tried, these worked best in my opinion:

- hermes3: In my opinion, the best 8B parameter model.

- mistral-nemo: My second choice, with 12B parameters.

- llama3-groq-tool-use: The model used in this example.
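To show what tool calling means at the API level, here is a minimal Python sketch of a non-streaming request to Ollama's `/api/chat` endpoint that offers the model a single function. It assumes Ollama runs on its default port 11434 on the same machine; the tool description and the empty parameter schema are illustrative placeholders, not the definition Live Helper Chat ships with.

```python
import json
import urllib.request

# Assumption: Ollama is reachable on its default port on this machine.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"


def build_chat_payload(model: str, user_text: str) -> dict:
    """Build a non-streaming /api/chat request that offers the model one tool."""
    return {
        "model": model,
        "stream": False,
        "messages": [{"role": "user", "content": user_text}],
        "tools": [
            {
                "type": "function",
                "function": {
                    # Illustrative tool; description and schema are placeholders.
                    "name": "transfer_operator",
                    "description": "Transfer the visitor to a human operator.",
                    "parameters": {"type": "object", "properties": {}},
                },
            }
        ],
    }


def send_chat(payload: dict, url: str = OLLAMA_CHAT_URL) -> dict:
    """POST the payload as JSON and return Ollama's decoded JSON reply."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=120) as response:
        return json.load(response)


# Usage (needs a running server):
#   reply = send_chat(build_chat_payload("llama3-groq-tool-use", "I want a human."))
#   print(reply["message"])  # may contain "tool_calls" instead of plain text
```

Changing only the model name is enough to compare the models listed above.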

You need a running Ollama server with your chosen model already pulled.
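One way to confirm both requirements is to ask the server which models it has. The Python sketch below queries Ollama's `/api/tags` endpoint; it assumes the default address `http://localhost:11434`, and the helper names are illustrative.

```python
import json
import urllib.request


def list_local_models(base_url: str = "http://localhost:11434") -> list[str]:
    """Return the model names reported by Ollama's /api/tags endpoint."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=10) as response:
        data = json.load(response)
    return [model["name"] for model in data.get("models", [])]


def has_model(names: list[str], model: str) -> bool:
    """Check a model name against the list; a bare name only matches ':latest'."""
    wanted = model if ":" in model else f"{model}:latest"
    return wanted in names


# Usage (needs a running server):
#   print(has_model(list_local_models(), "llama3-groq-tool-use"))
```

If the check fails, `ollama pull llama3-groq-tool-use` downloads the model.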

Installation

Important:

- After installation, change the IP address so that it points to your running Ollama service.

- For debugging, edit the Rest API in the back office and check "Log all requests and their responses as system messages".

- Ensure your version includes the changes from this commit.

How to Call a Trigger Based on a Defined Function in Ollama?

Note the function defined for Ollama:

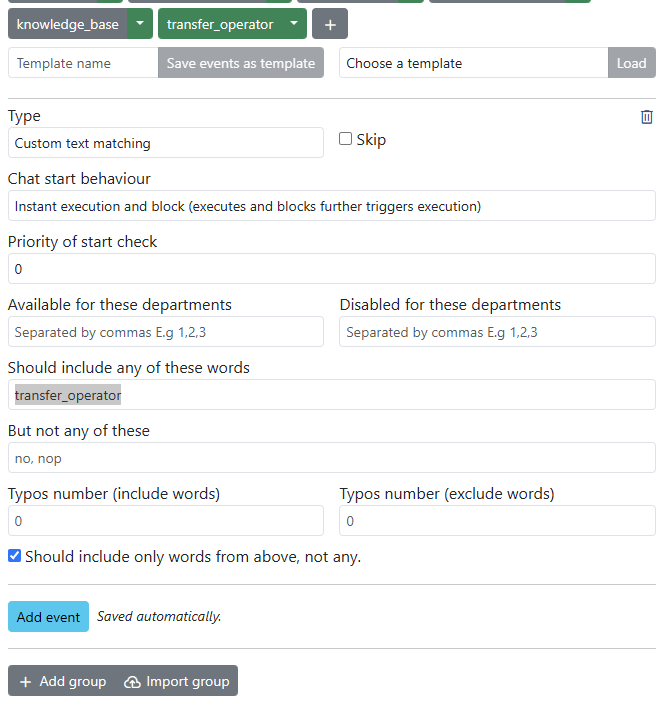

The function in this example is named `transfer_operator`. Add an event to your trigger with the Type set to "Custom text matching", and set the "Should include any of these words" value to `transfer_operator`.
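The idea behind this matching can be illustrated in Python: pull the function names out of an Ollama `/api/chat` reply and check them against a comma-separated word list, in the spirit of "Should include any of these words". This is a hypothetical illustration with invented helper names, not Live Helper Chat's actual matching code; the comma-separated format and plain substring matching are assumptions.

```python
def tool_call_names(reply: dict) -> list[str]:
    """Return the names of the functions the model asked to call (may be empty)."""
    calls = reply.get("message", {}).get("tool_calls") or []
    return [call["function"]["name"] for call in calls]


def includes_any_word(text: str, words: str) -> bool:
    """Hypothetical stand-in for 'Should include any of these words'.

    Assumes a comma-separated list and plain substring matching.
    """
    wanted = [word.strip() for word in words.split(",") if word.strip()]
    return any(word in text for word in wanted)


def should_fire(reply: dict, words: str) -> bool:
    """True if any requested function name matches the trigger's word list."""
    return any(includes_any_word(name, words) for name in tool_call_names(reply))


# Shape of a non-streaming /api/chat reply in which the model calls a tool.
sample_reply = {
    "message": {
        "role": "assistant",
        "content": "",
        "tool_calls": [{"function": {"name": "transfer_operator", "arguments": {}}}],
    }
}

print(should_fire(sample_reply, "transfer_operator"))  # True
```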

Forwarding a Port on WSL to Windows

From Ubuntu on Windows WSL:

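Add `OLLAMA_HOST=0.0.0.0` as an `Environment` entry in the `[Service]` section of `/etc/systemd/system/ollama.service`, so that Ollama listens on all interfaces instead of only on localhost: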

```ini
[Unit]
Description=Ollama Service
After=network-online.target

[Service]
ExecStart=/usr/local/bin/ollama serve
User=ollama
Group=ollama
Restart=always
RestartSec=3
Environment="PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/games:/usr/local/games:/snap/bin"
Environment="OLLAMA_HOST=0.0.0.0"

[Install]
WantedBy=default.target
```

Reload systemd so it picks up the changed unit file, then restart the service:

```shell
sudo systemctl daemon-reload
sudo systemctl restart ollama
```

From the Windows command prompt, find the IP address of the WSL instance:

```text
C:\Users\remdex>wsl.exe hostname -I
172.29.52.196 172.17.0.1
```
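If you repeat this step often, a small Python helper can turn that output into the hosts-file line used next. This is a sketch under the assumption that the first address listed belongs to the WSL interface, as in the example above (172.17.0.1 is typically a Docker bridge); the helper names are hypothetical.

```python
import subprocess


def read_wsl_hostname_output() -> str:
    """Run `wsl.exe hostname -I` (Windows only) and return its stdout."""
    return subprocess.run(
        ["wsl.exe", "hostname", "-I"], capture_output=True, text=True, check=True
    ).stdout


def first_wsl_ip(hostname_output: str) -> str:
    """Return the first address in the output; assumed to be the WSL interface."""
    addresses = hostname_output.split()
    if not addresses:
        raise ValueError("wsl.exe hostname -I printed no addresses")
    return addresses[0]


def hosts_entry(ip: str, alias: str = "wsl") -> str:
    """Format a line for C:\\Windows\\System32\\drivers\\etc\\hosts."""
    return f"{ip} {alias}"


# Usage on Windows:
#   print(hosts_entry(first_wsl_ip(read_wsl_hostname_output())))
```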

Edit the Windows hosts file (C:\Windows\System32\drivers\etc\hosts) and add the following line:

```text
172.29.52.196 wsl
```

From an elevated (Administrator) command prompt, forward port 11434 from WSL to Windows:

```text
netsh interface portproxy add v4tov4 listenport=11434 listenaddress=0.0.0.0 connectport=11434 connectaddress=wsl
```

Now you can access the Ollama service from Windows at http://your-pc-ip:11434/.
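To verify the forwarding from another machine, you can fetch the server's root URL, which answers with the plain text "Ollama is running". The Python sketch below assumes the default port 11434; the helper names are illustrative.

```python
import urllib.request


def ollama_root_url(host: str, port: int = 11434) -> str:
    """Build the root URL of an Ollama server (11434 is Ollama's default port)."""
    return f"http://{host}:{port}/"


def is_ollama_banner(body: str) -> bool:
    """Ollama's root endpoint replies with the plain text 'Ollama is running'."""
    return body.strip() == "Ollama is running"


def check_ollama(host: str) -> bool:
    """Fetch the root URL and report whether an Ollama server answered."""
    with urllib.request.urlopen(ollama_root_url(host), timeout=5) as response:
        return is_ollama_banner(response.read().decode("utf-8"))


# Usage, replacing your-pc-ip with the Windows machine's address:
#   print(check_ollama("your-pc-ip"))
```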